IMSL_CONSTRAINED_NLP

The IMSL_CONSTRAINED_NLP function solves a general nonlinear programming problem using a sequential equality constrained quadratic programming method.

This routine requires an IDL Advanced Math and Stats license. For more information, contact your sales or technical support representative.

The IMSL_CONSTRAINED_NLP function provides an interface to a licensed version of subroutine DONLP2, a code developed by Peter Spellucci (1998). It uses a sequential equality constrained quadratic programming method with an active set technique, and an alternative usage of a fully regularized mixed constrained subproblem in case of nonregular constraints (for example, linear dependent gradients in the "working sets"). It uses a slightly modified version of the Pantoja-Mayne update for the Hessian of the Lagrangian, variable dual scaling and an improved Armjijo-type stepsize algorithm. Bounds on the variables are treated in a gradient-projection manner. Details may be found in the following two papers:

- Spellucci, P. "An SQP Method for General Nonlinear Programs Using Only Equality Constrained Subproblems." Mathematical Programming 82 (1998): 413-448.

- Spellucci, P. "A New Technique for Inconsistent Problems in the SQP Method." Mathematical Methods of Operations Research 47 (1998): 355-500. Published by Physica Verlag, Heidelberg, Germany.

The problem is stated as follows:

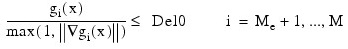

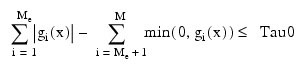

subject to:

Although default values are provided for input keywords, it may be necessary to adjust these values for some problems. Through the use of keywords, IMSL_CONSTRAINED_NLP allows for several parameters of the algorithm to be adjusted to account for specific characteristics of problems. The DONLP2 Users Guide provides detailed descriptions of these parameters as well as strategies for maximizing the performance of the algorithm. The DONLP2 Users Guide is available in the manuals subdirectory of the main product installation directory. In addition, the following are guidelines to consider when using IMSL_CONSTRAINED_NLP.

- A good initial starting point is very problem-specific and should be provided by the calling program whenever possible. For more details, see the keyword XGUESS.

- Gradient approximation methods can have an effect on the success of IMSL_CONSTRAINED_NLP. Selecting a higher order approximation method may be necessary for some problems. For more details, see the keyword DIFFTYPE.

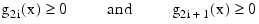

- If a two-sided constraint:

is transformed into two constraints:

then choose:

Or at least try to provide an estimate for that value. This will increase the efficiency of the algorithm. For more details, see the keyword DEL0.

- The parameter ierr provided in the interface to the user supplied function f can be very useful in cases when evaluation is requested at a point that is not possible or reasonable. For example, if evaluation at the requested point would result in a floating point exception, then setting ierr to 1 and returning without performing the evaluation will avoid the exception. IMSL_CONSTRAINED_NLP will then reduce the stepsize and try the step again. Note, if ierr is set to 1 for the initial guess, then an error is issued.

Example

The problem:

min F(x) = (x1 – 2)2 + (x2 – 1)2

subject to:

g1(x) = x1 – 2x2 + 1 = 0

g2(x) = – x2/4 – x22 + 1 ≥ 0

is solved first with finite difference gradients, then with analytic gradients.

PRO Nlp_grad, x, iact, result

CASE iact OF

0:result = [ 2 * (x(0) - 2.), 2 * (x(1)-1.)]

1:result = [1., -2. ]

2:result = [-0.5*x(0), -2.0*x(1)]

ENDCASE

RETURN

END

PRO Nlp_fcn, x, iact, result, ierr

tmp1 = x(0)-2.

tmp2 = x(1) - 1.

CASE iact OF

0:result = tmp1^2 + tmp2^2

1:result = x(0) -2.*x(1) + 1.

2:result = -(x(0)^2)/4. - x(1)^2 + 1.

ENDCASE

ierr = 0

END

; Ex #1, Finite difference gradients

ans1 = IMSL_CONSTRAINED_NLP('nlp_fcn', 2, 2, MEQ = 1)

PM, ans1, title='X with finite difference gradient'

; Ex #2, Analytic gradients

ans2 = IMSL_CONSTRAINED_NLP('nlp_fcn', 2, 2, MEQ = 1, $

GRAD = 'nlp_grad')

PM, ans2, title='X with Analytic gradient'

IDL prints:

X with finite difference gradient

0.822877

0.911439

X with Analytic gradient

0.822877

0.911438

Syntax

Result = IMSL_CONSTRAINED_NLP (f, m, n [, /DOUBLE] [, DEL0=value] [, DELMIN=string] [, DIFFTYPE=value] [, EPSDIF=value] [, EPSFCN=value] [, GRAD=value] [, IBTYPE=string] [, ITMAX=value] [, MEQ=value] [, OBJ=value] [, SCFMAX=string] [, SMALLW=string] [, TAU0=value] [, TAUBND=value] [, XGUESS=array] [, XLB=variable] [, XSCALE=vector] [, XUB=variable])

Return Value

The solution of the nonlinear programming problem.

Arguments

f

Scalar string specifying a user-supplied procedure to evaluate the objective function and constraints at a given point. The input parameters are:

- x: One dimensional array at which the objective function or a constraint is evaluated.

- iact: Integer indicating whether evaluation of the objective function is requested or evaluation of a constraint is requested. If iact is zero, then an objective function evaluation is requested. If iact is nonzero then the value of iact indicates the index of the constraint to evaluate.

- result: If iact is zero, then Result is the computed objective function at the point x. If iact is nonzero, then Result is the requested constraint value at the point x.

- ierr: Integer variable. On input, ierr is set to 0. If an error or other undesirable condition occurs during evaluation, then ierr should be set to 1. Setting ierr to 1 will result in the step size being reduced and the step being tried again. (If ierr is set to 1 for XGUESS, then an error is issued.)

m

Total number of constraints.

n

Number of variables.

Keywords

DOUBLE (optional)

If present and nonzero, then double precision is used.

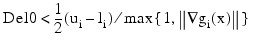

DEL0 (optional)

In the initial phase of minimization, a constraint is considered binding if:

Good values are between .01 and 1.0. If DEL0 is too small, then identification of the correct set of binding constraints may be delayed. Conversely, if DEL0 is too large, then the method will often escape to the full regularized SQP method. This method uses individual slack variables for any active constraint, which is quite costly. For well-scaled problems, DEL0 = 1.0 is reasonable. The default value is 0.5* TAU0.

DELMIN (optional)

Scalar which defines allowable constraint violations of the final accepted result. Constraints are satisfied if |gi(x)| is less than or equal to DELMIN, and gi(x) is greater than or equal to (-DELMIN), respectively. The default value is min(DEL0/10, max(EPSDIF, min(DEL0/10, max(1.E-6* DEL0, SMALLW)).

DIFFTYPE (optional)

Type of numerical differentiation to be used. The default value is 1.

- 1: Use a forward difference quotient with discretization stepsize 0.1(EPSFCN1/2) component-wise relative.

- 2: Use the symmetric difference quotient with discretization stepsize 0.1(EPSFCN1/3) component-wise relative.

- 3: Use the sixth order approximation computing a Richardson extrapolation of three symmetric difference quotient values. This uses a discretization stepsize 0.01(EPSFCN1/7).

This keyword is not valid if the keyword GRAD is supplied.

EPSDIF (optional)

Relative precision in gradients. The default value is the machine precision. This keyword is not valid if the keyword GRAD is supplied.

EPSFCN (optional)

Relative precision of the function evaluation routine. The default value is the machine precision. This keyword is not valid if the keyword GRAD is supplied.

GRAD (optional)

Scalar string specifying a user-supplied procedure to evaluate the gradients at a given point. The procedure specified by GRAD has the following parameters:

- x: One dimensional array at which the gradient of the objective function or gradient of a constraint is evaluated.

- iact: Integer indicating whether evaluation of the gradient of the objective function is requested or evaluation of gradient of a constraint is requested. If iact is zero, then an objective function evaluation is requested. If iact is nonzero then the value if iact indicates the index of the constraint to evaluate.

- result: If iact is zero, then Result is the computed gradient of the objective function at the point x. If iact is nonzero, then Result is the gradient of the requested constraint value at the point x.

IBTYPE (optional)

Scalar indicating the types of bounds on variables.

- 0: User supplies all the bounds.

- 1: All variables are non-negative.

- 2: All variables are nonpositive.

- 3: User supplies only the bounds on first variable; all other variables have the same bounds.

- Default: no bounds are enforced

ITMAX (optional)

Maximum number of iterations allowed. The default value is 200.

MEQ (optional)

Number of equality constraints. The default value is m.

OBJ (optional)

Name of a variable into which a scalar containing the value of the objective function at the computed solution is stored.

SCFMAX (optional)

Scalar containing the bound for the internal automatic scaling of the objective function. The default value is 1.0e4.

SMALLW (optional)

Scalar containing the error allowed in the multipliers. For example, a negative multiplier of an inequality constraint is accepted (as zero) if its absolute value is less than SMALLW. The default value is exp(2*log(eps/3)) where eps is the machine precision.

TAU0 (optional)

A universal bound describing how much the unscaled penalty-term may deviate from zero. IMSL_CONSTRAINED_NLP assumes that within the region described by:

all functions may be evaluated safely. The initial guess, however, may violate these requirements. In that case, an initial feasibility improvement phase is run by IMSL_CONSTRAINED_NLP until such a point is found. A small TAU0 value diminishes the efficiency of IMSL_CONSTRAINED_NLP because the iterations will closely follow the boundary of the feasible set. Conversely, a large TAU0 value may degrade the reliability of the code. The default value is 1.0.

TAUBND (optional)

Amount by which bounds may be violated during numerical differentiation. Bounds are violated by TAUBND (at most) only if a variable is on a bound and finite differences are taken for gradient evaluations. This keyword is not valid if the keyword GRAD is supplied. The default value is 1.0.

XGUESS (optional)

Array with n components containing an initial guess of the computed solution. The default value is X, with the smallest value of ||X||2 that satisfies the bounds.

XLB (optional)

Named variable containing a one-dimensional array with n components, containing the lower bounds on the variables. (Input, if IBTYPE = 0; Output, if IBTYPE = 1 or 2; Input/Output, if IBTYPE = 3). If there is no lower bound on a variable, the corresponding XLB value should be set to negative machine infinity. The default is that no lower bounds are enforced on the variables.

XSCALE (optional)

Vector of length n setting the internal scaling of the variables. The initial value given and the objective function and gradient evaluations however are always in the original unscaled variables. The first internal variable is obtained by dividing values x(I) by XSCALE(I). This keyword is not valid if the keyword GRAD is supplied. In the absence of other information, set all entries to 1.0. Default: XSCALE(*) = 1.0.

XUB (optional)

Named variable containing a one-dimensional array with n components, containing the upper bounds on the variables. (Input, if IBTYPE = 0; Output, if IBTYPE = 1 or 2; Input/Output, if IBTYPE = 3). If there is no upper bound on a variable, the corresponding XUB value should be set to positive machine infinity. The default is that no upper bounds are enforced on variables.

Version History

|

6.4 |

Introduced |